
How to Deploy an AKS Cluster Using Azure Pipelines and Terraform: A Step-by-Step Guide

Azure DevOps provides developer services that allow teams to plan work, collaborate on code development, and build and deploy applications.

Azure Pipelines is a set of automated processes and tools that allows developers and operations professionals to collaborate on building and deploying code to a production environment. It automatically builds and tests code projects to make them available to others. Azure Pipelines combines continuous integration (CI) and continuous delivery (CD) to test and build your code and ship it to any target.

This blog explains how to deploy an Azure Kubernetes Service (AKS) cluster using Azure Pipelines and Terraform.

What is Infrastructure as Code (IaC)?

Infrastructure as code (IaC) is an IT practice that manages an application's underlying IT infrastructure through code rather than manual processes. With IaC, you write configuration files that contain your infrastructure specifications, which makes configurations easier to edit, review, and distribute. Popular IaC tools include AWS CloudFormation, Azure Resource Manager (ARM) templates, Red Hat Ansible, Chef, Puppet, and HashiCorp Terraform.

Why Terraform?

Terraform is an infrastructure as code tool that lets you define both cloud and on-prem resources in human-readable configuration files that you can version, reuse, and share.

Terraform uses a declarative configuration language known as HashiCorp Configuration Language (HCL), or optionally JSON. It can deploy infrastructure across multiple clouds as well as in on-premises data centres.
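To illustrate the declarative style, here is a minimal sketch (the resource group name is hypothetical; the provider version matches the one pinned later in main.tf). It describes only the desired end state: terraform plan compares it with the recorded state, and terraform apply creates the resource group if it is missing and otherwise leaves it untouched.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "2.99"
    }
  }
}

provider "azurerm" {
  features {}
}

# Declares the desired end state: this resource group should exist.
# Terraform works out whether to create, update, or leave it alone.
resource "azurerm_resource_group" "example" {
  name     = "example-rg" # hypothetical name
  location = "uksouth"
}
```

Running terraform apply a second time with no changes to this file results in no further changes, which is the practical difference from an imperative script.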

Key Features of Terraform:

Declarative Language: Terraform uses the HashiCorp Configuration Language (HCL) to define infrastructure, which allows for reusable and shareable configurations.

Modules: Terraform modules help organize configurations, encapsulate resources, and enable code reuse, consistency, and best practices.

What are Terraform Modules?

A Terraform module is a collection of standard configuration files in a dedicated directory. Modules are intended for code reuse: a module lets you group resources together and reuse that group later, possibly many times. Modules help to:

- Organise configuration

- Encapsulate configuration

- Reuse configuration

- Provide consistency and best practices
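As a sketch of how a module encapsulates and reuses configuration, the block below defines a small hypothetical module that creates a tagged resource group, then calls it twice from a root module with different inputs. The module path, names, and tags are illustrative assumptions, and the two parts would live in separate directories.

```hcl
# --- modules/resource-group/main.tf (hypothetical module) ---
variable "name" {
  type        = string
  description = "Name of the resource group"
}

variable "location" {
  type        = string
  description = "Azure region for the resource group"
}

variable "tags" {
  type        = map(string)
  description = "Tags applied to the resource group"
  default     = {}
}

resource "azurerm_resource_group" "this" {
  name     = var.name
  location = var.location
  tags     = var.tags
}

output "name" {
  value = azurerm_resource_group.this.name
}

# --- Root module (a separate file, e.g. main.tf) ---
# The same module is reused twice with different inputs.
module "rg_dev" {
  source   = "./modules/resource-group"
  name     = "dev-devops-aks-rg"
  location = "uksouth"
  tags     = { env = "dev" }
}

module "rg_test" {
  source   = "./modules/resource-group"
  name     = "test-devops-aks-rg"
  location = "uksouth"
  tags     = { env = "test" }
}
```

The AKS and VNet modules used later in this guide follow the same pattern, except that they are pulled from the Terraform Registry instead of a local path.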

Why Use Remote State in Terraform?

By default, Terraform stores information about the infrastructure in a state file (terraform.tfstate) on the local filesystem. A local file complicates team use of Terraform: each user must make sure they always have the latest state data before running Terraform, and that nobody else runs Terraform at the same time. With remote state, Terraform writes the state data to a remote data store that can be shared by all members of a team, and storing state files remotely can also offer additional security. Terraform supports storing state in Terraform Cloud, HashiCorp Consul, Amazon S3, Azure Blob Storage, Google Cloud Storage, Alibaba Cloud OSS, and more.
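Remote state also lets one configuration consume another's results. Assuming a separate, hypothetical network configuration whose state lives in an Azure storage container and which exposes an output named aks_subnet_id, a sketch of reading it with the built-in terraform_remote_state data source could look like this (all names are placeholders):

```hcl
# Read outputs published in another configuration's remote state.
# Every value below is a hypothetical placeholder.
data "terraform_remote_state" "network" {
  backend = "azurerm"

  config = {
    resource_group_name  = "<tfstate-resource-group>"
    storage_account_name = "<storage-account-name>"
    container_name       = "<container-name>"
    key                  = "network.terraform.tfstate"
  }
}

# Only values declared as outputs in that configuration are readable.
output "shared_subnet_id" {
  value = data.terraform_remote_state.network.outputs.aks_subnet_id
}
```

The azurerm backend also locks the state blob while an operation runs, which addresses the concurrent-run problem described above.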

Architecture Overview:

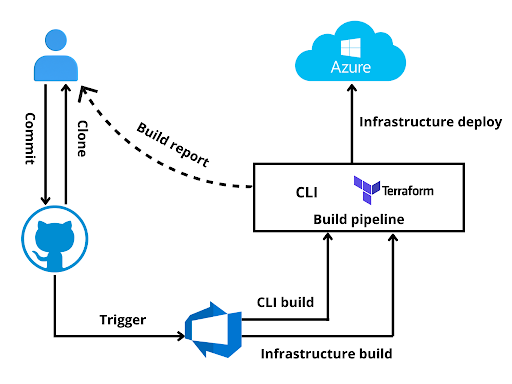

We deploy an AKS cluster in a custom virtual network using Terraform, with CI handled by Azure Pipelines. This scenario uses GitHub as the version control system and integrates it with Azure Pipelines.

Workflow:

A git push to the master branch triggers the build; Terraform then deploys the AKS cluster through the Azure DevOps pipeline, which returns the build report to the user.

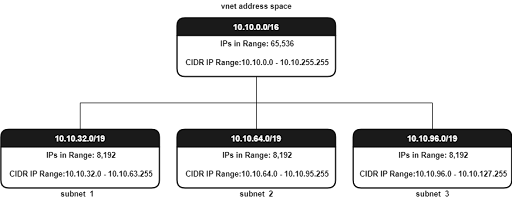

Azure VNET IP allocation

To handle traffic flow in an AKS cluster, you choose one of two network plugins: kubenet or Azure CNI. Here we use Azure CNI. With Azure CNI, every pod gets an IP address from the subnet and can communicate directly with other pods and services. Each node has a configuration parameter that sets the maximum number of pods it can run (agents_max_pods = 100 in this deployment), and an equivalent number of IP addresses is reserved up front for that node. This demands careful planning of the virtual network; otherwise you risk IP address exhaustion or having to rebuild the cluster in a larger subnet as your application's demands grow.
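As a rough planning aid, the sketch below applies the sizing formula Microsoft documents for Azure CNI, (nodes + 1) × (max pods + 1), where the extra node allows for an upgrade surge, to the values used later in sg-aks.tf and terraform.tfvars. It then checks the result against the usable addresses in the node subnet, since Azure reserves five addresses in every subnet. The local and output names are hypothetical, and the numbers are copied in by hand rather than wired to the real variables.

```hcl
locals {
  # Planning inputs copied from sg-aks.tf and terraform.tfvars
  max_nodes         = 2               # agents_max_count
  max_pods_per_node = 100             # agents_max_pods
  aks_subnet_prefix = "10.10.32.0/19" # first entry of subnet_prefixes

  # Azure CNI sizing: (nodes + 1) * (max pods + 1).
  # The extra node covers an upgrade surge; the extra "pod" is the node's own IP.
  required_ips = (local.max_nodes + 1) * (local.max_pods_per_node + 1)

  # Addresses in the subnet, minus the 5 that Azure reserves
  prefix_length = tonumber(split("/", local.aks_subnet_prefix)[1])
  usable_ips    = pow(2, 32 - local.prefix_length) - 5
}

output "aks_subnet_ip_check" {
  value = {
    required = local.required_ips
    usable   = local.usable_ips
    fits     = local.usable_ips >= local.required_ips
  }
}
```

With these inputs a /19 leaves plenty of headroom; the same check becomes more important if agents_max_count or agents_max_pods grows.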

Tools used

- GitHub

- Azure Cloud

- Terraform

- Managed Kubernetes

- Azure Pipeline

Steps to Deploy AKS Cluster using Azure Pipeline and Terraform

1. Create a GitHub repository:

All the code, including the Terraform (.tf) files and the pipeline file, will be kept in this GitHub repository.

2. Configure Azure Service Principal with a secret:

In many scenarios you need rights on an Azure subscription not as a user but as an application. This is what an Azure service principal provides: an identity that can be granted access to resources or resource groups in Azure. We recommend using a service principal for this deployment.

3. Creating an Azure Service Principal with Contributor role:

```bash
az ad sp create-for-rbac --name "myApp" --role contributor \
    --scopes /subscriptions/<subscription-id> \
    --sdk-auth
```

Replace <subscription-id> with your subscription ID. The Contributor role is assigned at subscription scope because both the pipeline and Terraform create new resource groups, which a role assignment scoped to a single existing resource group does not permit. The --sdk-auth flag prints the output in a JSON format compatible with the Azure SDK auth file.

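Note: The service principal also needs Microsoft Graph permission to read Azure Active Directory group information (for example the Group.Read.All or Directory.Read.All application permission), because sg-aks.tf looks up the admin group with the azuread_group data source.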
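In the pipeline, the Terraform task is expected to hand the service connection's credentials to Terraform through the ARM_CLIENT_ID, ARM_CLIENT_SECRET, ARM_TENANT_ID, and ARM_SUBSCRIPTION_ID environment variables, which both the azurerm and azuread providers read. For a local run, a sketch that wires the same service principal in explicitly might look like this; the variable names are assumptions, and the block would replace the provider block in main.tf.

```hcl
# Hypothetical variables carrying the service principal's credentials
variable "subscription_id" {
  type        = string
  description = "Azure subscription ID"
}

variable "tenant_id" {
  type        = string
  description = "Azure AD tenant ID"
}

variable "client_id" {
  type        = string
  description = "Application (client) ID of the service principal"
}

variable "client_secret" {
  type        = string
  description = "Client secret of the service principal"
  sensitive   = true # redacted from plan and apply output
}

# Explicit service principal authentication for both providers
provider "azurerm" {
  features {}

  subscription_id = var.subscription_id
  tenant_id       = var.tenant_id
  client_id       = var.client_id
  client_secret   = var.client_secret
}

provider "azuread" {
  tenant_id     = var.tenant_id
  client_id     = var.client_id
  client_secret = var.client_secret
}
```

Supply the secret through an environment variable such as TF_VAR_client_secret rather than committing it to terraform.tfvars.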

Setting up an Azure DevOps Project and a Service Connection:

An Azure DevOps project provides a platform for users to plan work, track progress, and collaborate on building software solutions. To create a project, go to Azure DevOps > New Project > Create.

An Azure Resource Manager service connection lets pipelines deploy applications to Azure. There are several ways to create and manage one; here we use an Azure Resource Manager service connection with an existing service principal. Go to Project settings > Service connections (under Pipelines settings) > New service connection > Azure Resource Manager > Service principal (manual), create the service connection at subscription scope, and enter the credentials of the service principal created in the previous step.

1. Getting Terraform files:

Check out a new branch and create the .tf files following the tree structure below.

```
.
├── azure-pipelines.yml
└── azure-tf
    ├── main.tf
    ├── sg-aks.tf
    ├── sg-resource-group.tf
    ├── sg-vnet.tf
    ├── terraform.tfvars
    └── variables.tf
```

2. Terraform contents:

Terraform will deploy an AKS cluster into a custom Azure virtual network, along with a resource group for the cluster.

main.tf

```hcl
terraform {
  # Intentionally empty: the pipeline's Terraform Init task supplies the
  # storage account, container, and key at init time (partial configuration).
  backend "azurerm" {}

  # Azure Provider Version #
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "2.99"
    }
    azuread = {
      source  = "hashicorp/azuread"
      version = "2.21.0"
    }
  }
}

# Configure the Microsoft Azure Provider
provider "azurerm" {
  features {}
}
```
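Because the backend block is a partial configuration, running terraform init outside the pipeline needs the same storage settings from somewhere else. A sketch of a hypothetical backend.hcl whose placeholder values mirror the pipeline's backendAzureRm* inputs:

```hcl
# backend.hcl (hypothetical file name); values mirror the pipeline's backend inputs.
# Usage: terraform init -backend-config=backend.hcl
resource_group_name  = "<tfstate-resource-group>"
storage_account_name = "<storage-account-name>"
container_name       = "<container-name>"
key                  = "terraform.tfstate"
```

Keeping these values out of main.tf lets the same code point at different state stores per environment.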

sg-aks.tf

```hcl
data "azuread_group" "admin-team" {
  display_name = "<your-admin-group>"
}

module "aks" {
  source  = "Azure/aks/azurerm"
  version = "4.14.0"

  resource_group_name  = azurerm_resource_group.sg_aks_rg.name
  kubernetes_version   = "1.22.6"
  orchestrator_version = "1.22.6"
  prefix               = "${var.env}-${var.group}-${var.app}"
  cluster_name         = "${var.env}-${var.group}-${var.app}-cluster"
  vnet_subnet_id       = module.vnet.vnet_subnets[0]
  network_plugin       = "azure"
  os_disk_size_gb      = 50
  sku_tier             = "Paid" # defaults to Free

  enable_role_based_access_control = true
  rbac_aad_admin_group_object_ids  = [data.azuread_group.admin-team.id]
  rbac_aad_managed                 = true
  //enable_azure_policy = true # calico.. etc

  enable_auto_scaling       = true
  enable_host_encryption    = true
  agents_min_count          = 1
  agents_max_count          = 2
  agents_count              = null # Please set `agents_count` `null` while `enable_auto_scaling` is `true` to avoid possible `agents_count` changes.
  agents_max_pods           = 100
  agents_pool_name          = "agentpool"
  agents_availability_zones = ["1", "2", "3"]
  agents_type               = "VirtualMachineScaleSets"
  agents_size               = "Standard_B2ms"

  agents_labels = {
    "agentpool" : "agentpool"
  }

  agents_tags = {
    "Agent" : "defaultnodepoolagent"
  }

  net_profile_dns_service_ip     = var.net_profile_dns_service_ip
  net_profile_docker_bridge_cidr = var.net_profile_docker_bridge_cidr
  net_profile_service_cidr       = var.net_profile_service_cidr

  depends_on = [module.vnet]
}
```

Note: Replace <your-admin-group> with the name of your Azure AD group, if you already have one. Otherwise, create an Active Directory group and add the users who need access to the AKS cluster.
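If the group does not exist yet, it can also be managed in Terraform with the azuread provider already declared in main.tf. A minimal sketch, where the group name and the user principal name are hypothetical:

```hcl
# Existing user to add to the admin group (hypothetical UPN)
data "azuread_user" "aks_admin" {
  user_principal_name = "admin.user@example.com"
}

# Security group whose members become cluster admins through
# AKS-managed Azure AD integration (hypothetical group name)
resource "azuread_group" "aks_admins" {
  display_name     = "aks-admins"
  security_enabled = true
  members          = [data.azuread_user.aks_admin.object_id]
}
```

sg-aks.tf would then pass azuread_group.aks_admins.object_id to rbac_aad_admin_group_object_ids in place of the data source lookup. Creating groups needs broader Microsoft Graph permissions than reading them, for example Group.ReadWrite.All.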

sg-resource-group.tf

```hcl
resource "azurerm_resource_group" "sg_aks_rg" {
  name     = "${var.env}-${var.group}-${var.app}-rg"
  location = var.region

  tags = {
    app   = var.app
    env   = var.env
    group = var.group
  }
}
```

sg-vnet.tf

```hcl
module "vnet" {
  source  = "Azure/vnet/azurerm"
  version = "~> 2.6.0"

  resource_group_name = azurerm_resource_group.sg_aks_rg.name
  vnet_name           = "${var.env}-${var.group}-${var.app}-${var.vnet_name}"
  address_space       = var.address_space
  subnet_prefixes     = var.subnet_prefixes
  subnet_names        = var.subnet_names

  tags = {
    env   = var.env
    group = var.group
    app   = var.app
  }

  depends_on = [azurerm_resource_group.sg_aks_rg]
}
```

terraform.tfvars

```hcl
## Vnet Variables ##
address_space   = ["10.10.0.0/16"]
subnet_prefixes = ["10.10.32.0/19", "10.10.64.0/19", "10.10.96.0/19"]
```
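The three /19 subnets are consecutive blocks carved out of the /16 address space. A sketch of deriving the same list with Terraform's cidrsubnet function (the local and output names are hypothetical; terraform.tfvars cannot contain function calls, so this would live in a .tf file):

```hcl
locals {
  vnet_cidr = "10.10.0.0/16"

  # 3 extra prefix bits turn the /16 into eight /19 blocks (netnum 0-7);
  # netnum 1, 2 and 3 reproduce the subnet_prefixes in terraform.tfvars.
  derived_subnet_prefixes = [for netnum in [1, 2, 3] : cidrsubnet(local.vnet_cidr, 3, netnum)]
}

output "derived_subnet_prefixes" {
  value = local.derived_subnet_prefixes
}
```

This leaves netnum 0 (10.10.0.0/19) and netnum 4 through 7 free for future subnets.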

variables.tf

```hcl
## Global Variables ##

variable "region" {
  type    = string
  default = "uksouth"
}

variable "env" {
  type    = string
  default = "poc"
}

variable "group" {
  type    = string
  default = "devops"
}

variable "app" {
  type    = string
  default = "aks"
}

## VNET variables ##

variable "vnet_name" {
  description = "Name of the vnet to create"
  type        = string
  default     = "vnet"
}

variable "address_space" {
  type        = list(string)
  description = "Azure vnet address space"
}

variable "subnet_prefixes" {
  type        = list(string)
  description = "Azure vnet subnets"
}

variable "subnet_names" {
  type        = list(string)
  description = "Azure vnet subnet names in order"
  default     = ["subnet1", "subnet2", "subnet3"]
}

## AKS Variables ##

variable "net_profile_service_cidr" {
  description = "(Optional) The Network Range used by the Kubernetes service. Changing this forces a new resource to be created."
  type        = string
  default     = "10.0.0.0/16"
}

variable "net_profile_dns_service_ip" {
  description = "(Optional) IP address within the Kubernetes service address range that will be used by cluster service discovery (kube-dns). Changing this forces a new resource to be created."
  type        = string
  default     = "10.0.0.10"
}

variable "net_profile_docker_bridge_cidr" {
  description = "(Optional) IP address (in CIDR notation) used as the Docker bridge IP address on nodes. Changing this forces a new resource to be created."
  type        = string
  default     = "172.17.0.1/16"
}
```
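Since changing net_profile_service_cidr forces a new cluster, catching a malformed value at plan time is cheap insurance. A sketch of the same declaration with a validation block added (the error message wording is an assumption):

```hcl
variable "net_profile_service_cidr" {
  description = "(Optional) The Network Range used by the Kubernetes service. Changing this forces a new resource to be created."
  type        = string
  default     = "10.0.0.0/16"

  validation {
    # cidrhost() errors on anything that is not a valid CIDR block,
    # so can() turns that error into false and the check fails early.
    condition     = can(cidrhost(var.net_profile_service_cidr, 0))
    error_message = "The net_profile_service_cidr value must be a valid CIDR block, such as 10.0.0.0/16."
  }
}
```

Validation blocks on a variable can only reference that variable in older Terraform releases, so checking that net_profile_dns_service_ip falls inside this range would need a separate mechanism.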

3. Pipeline Setup:

Azure Pipelines builds and tests the code automatically. Here, the pipeline is triggered by changes to the master branch.

azure-pipelines.yml

```yaml
trigger:
  - master

#variables:
#  global_variable: value # this is available to all jobs

jobs:
  - job: terraform_deployment
    pool:
      vmImage: ubuntu-latest
    variables:
      az_region: '<azure-region>'
      resource_group_name: '<tfstate-resource-group>'
      subscription: '<subscription-id>'
      key_vault_name: '<key-vault-name>'
      sa_prefix: '<storage-account-name>'
      sa_container_name: '<container-name>'
      tfstateFile: terraform.tfstate
    steps:
      # Provision the Terraform state backend (resource group, storage account, container) plus a key vault
      - task: AzureCLI@2
        inputs:
          azureSubscription: '<service-connection-auth>' # replace with your service connection - azure resource manager service principal
          scriptType: 'bash'
          scriptLocation: 'inlineScript'
          inlineScript: |
            az group create -n $(resource_group_name) -l $(az_region)
            VAULT_ID=$(az keyvault create --name "$(key_vault_name)" --resource-group "$(resource_group_name)" --location "$(az_region)" --query "id" -o tsv)
            az storage account create --resource-group $(resource_group_name) --name "$(sa_prefix)" --sku Standard_LRS --encryption-services blob
            az storage container create --name $(sa_container_name) --account-name "$(sa_prefix)" --auth-mode login

      - task: TerraformInstaller@0
        displayName: Terraform Installation
        inputs:
          terraformVersion: 'latest'

      # workingDirectory matches the azure-tf folder in the repository tree
      - task: TerraformTaskV3@3
        displayName: Terraform Init
        inputs:
          provider: 'azurerm'
          command: 'init'
          workingDirectory: '$(System.DefaultWorkingDirectory)/azure-tf'
          backendServiceArm: '<service-connection-auth>'
          backendAzureRmResourceGroupName: '$(resource_group_name)'
          backendAzureRmStorageAccountName: '$(sa_prefix)'
          backendAzureRmContainerName: '$(sa_container_name)'
          backendAzureRmKey: '$(tfstateFile)'

      - task: TerraformTaskV3@3
        displayName: Terraform Plan
        inputs:
          provider: 'azurerm'
          command: 'plan'
          workingDirectory: '$(System.DefaultWorkingDirectory)/azure-tf'
          commandOptions: '-out=tfplan'
          environmentServiceNameAzureRM: '<service-connection-auth>'

      - task: TerraformTaskV3@3
        displayName: Terraform Apply
        inputs:
          provider: 'azurerm'
          command: 'apply'
          workingDirectory: '$(System.DefaultWorkingDirectory)/azure-tf'
          commandOptions: 'tfplan'
          environmentServiceNameAzureRM: '<service-connection-auth>'
```

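Replace the pipeline variables with appropriate values, and enter your service connection name in each <service-connection-auth> field. Storage account names must be globally unique, 3 to 24 characters long, and use only lowercase letters and numbers; key vault names must also be globally unique.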

4. Integrate the GitHub repo with Azure Pipelines:

To integrate the repository with Azure Pipelines, go to Azure DevOps > Pipelines > New pipeline > select GitHub as the code location > Authorize Azure Pipelines > authenticate and select the repository > Approve and install > select your Azure DevOps organization and project > choose Existing Azure Pipelines YAML file on your branch.

Merging the branch into master triggers the build.
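To make the build report more useful, the configuration could expose a few values as outputs, which Terraform prints at the end of the apply step in the pipeline log. A sketch of a hypothetical outputs.tf in azure-tf/, using only names already defined in the Terraform files above (the cluster name assumes the AKS module uses cluster_name as given):

```hcl
output "resource_group_name" {
  description = "Resource group that contains the AKS cluster"
  value       = azurerm_resource_group.sg_aks_rg.name
}

output "aks_cluster_name" {
  description = "Cluster name as composed in sg-aks.tf"
  value       = "${var.env}-${var.group}-${var.app}-cluster"
}

output "aks_subnet_id" {
  description = "Subnet the default node pool is attached to"
  value       = module.vnet.vnet_subnets[0]
}
```

With the first two values you can fetch cluster credentials using az aks get-credentials --resource-group <resource-group> --name <cluster-name>.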

Streamline Your Kubernetes Deployments with Azure DevOps and Terraform

Combining Azure DevOps with Terraform is a great advantage for any organisation looking to modernise its cloud infrastructure with IaC, cloud services, cloud-based backend storage, and automation pipelines.

Looking for help with DevOps, or with your DevOps implementation strategy? Reach out to us to see how we can help.

FAQs

1. What are the key benefits of using Terraform with Azure Pipeline for AKS?

The combination allows for automated, consistent, and scalable infrastructure provisioning. It also provides version control for infrastructure changes, and automated deployment pipelines ensure faster and more reliable updates.

2. How do I scale my AKS cluster after deployment?

Update the Terraform configuration and push the change; Azure Pipelines re-runs plan and apply to roll it out. Because auto-scaling is enabled in sg-aks.tf (with agents_count set to null), you scale by adjusting agents_min_count and agents_max_count rather than a fixed node count.
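One way to make scaling a one-line change is to promote the auto-scaler bounds to variables. A sketch with hypothetical variable declarations for variables.tf:

```hcl
variable "agents_min_count" {
  type        = number
  description = "Minimum number of nodes in the default node pool (auto-scaling)"
  default     = 1
}

variable "agents_max_count" {
  type        = number
  description = "Maximum number of nodes in the default node pool (auto-scaling)"
  default     = 2

  validation {
    condition     = var.agents_max_count >= 1
    error_message = "The agents_max_count value must be at least 1."
  }
}
```

sg-aks.tf would then set agents_min_count = var.agents_min_count and agents_max_count = var.agents_max_count, so raising the ceiling becomes a single edit in terraform.tfvars (for example agents_max_count = 4) pushed through the pipeline. With Azure CNI, each extra node also reserves another agents_max_pods addresses in the subnet.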

3. Is it possible to use private GitHub repositories for this setup?

Yes, Azure Pipelines supports both public and private GitHub repositories for hosting Terraform files and pipeline configurations.

4. What are the key challenges when deploying AKS using Terraform and Azure Pipelines?

Some common challenges include proper IP allocation in Azure Virtual Networks, configuring Service Principals with adequate permissions, and handling state management for collaborative teams.