Graviton instances can deliver savings of up to 20%. This how-to explainer looks at how simple it is to run both Graviton (ARM) and x86 instances on a single EKS cluster.

Both architectures have their pros and cons, and combining the two in a well-designed infrastructure can achieve better performance at lower cost. ARM processors are based on the RISC (Reduced Instruction Set Computer) architecture, which is much simpler than the CISC (Complex Instruction Set Computer) architecture used by x86. x86 can perform complex calculations in less time, but as a result it needs more power, which means more heat. This is only one difference; there are more.

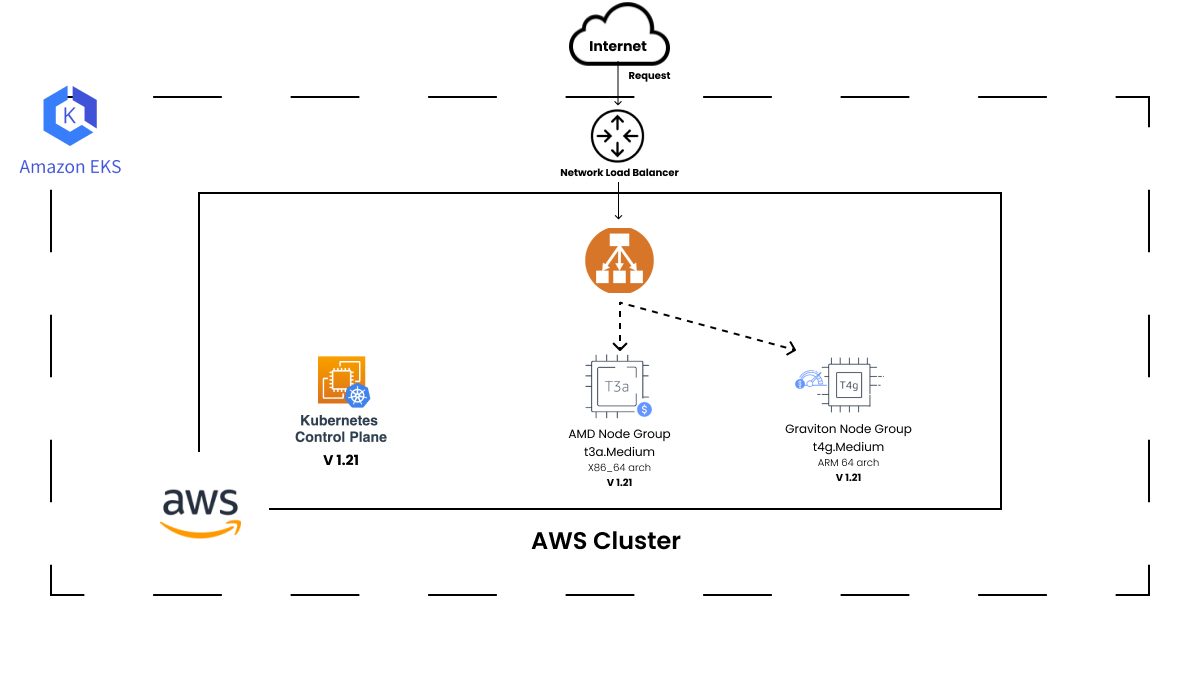

The following is an infrastructure setup using AWS Graviton and AMD EPYC.

Requirements

| Name | Version |
| --- | --- |
| AWS EKS Cluster | 1.21 |
| Nginx Ingress | 1.2.0 |
| Sockshop | – |

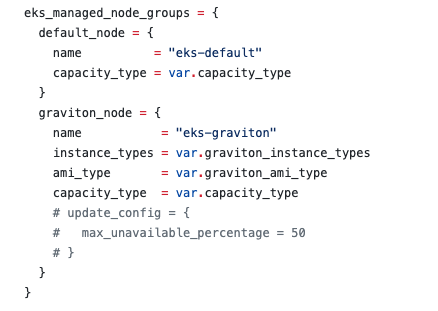

- First, create an EKS cluster with two node groups. Call one node group eks-graviton and the other eks-default.

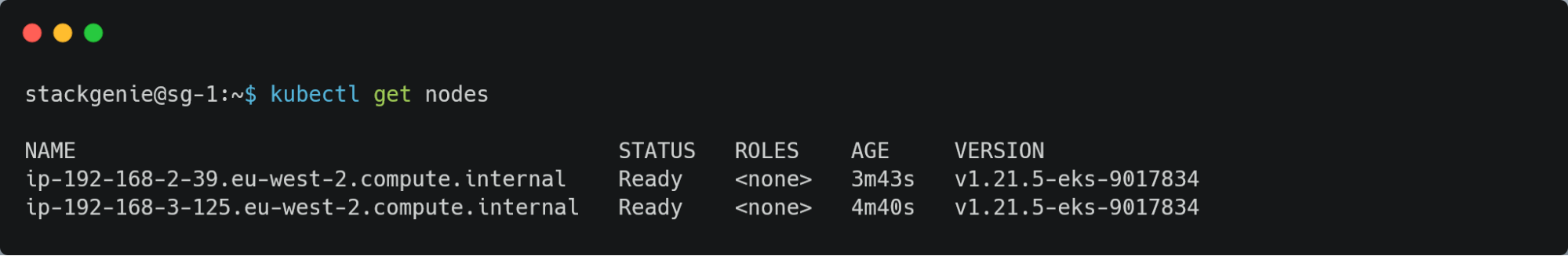

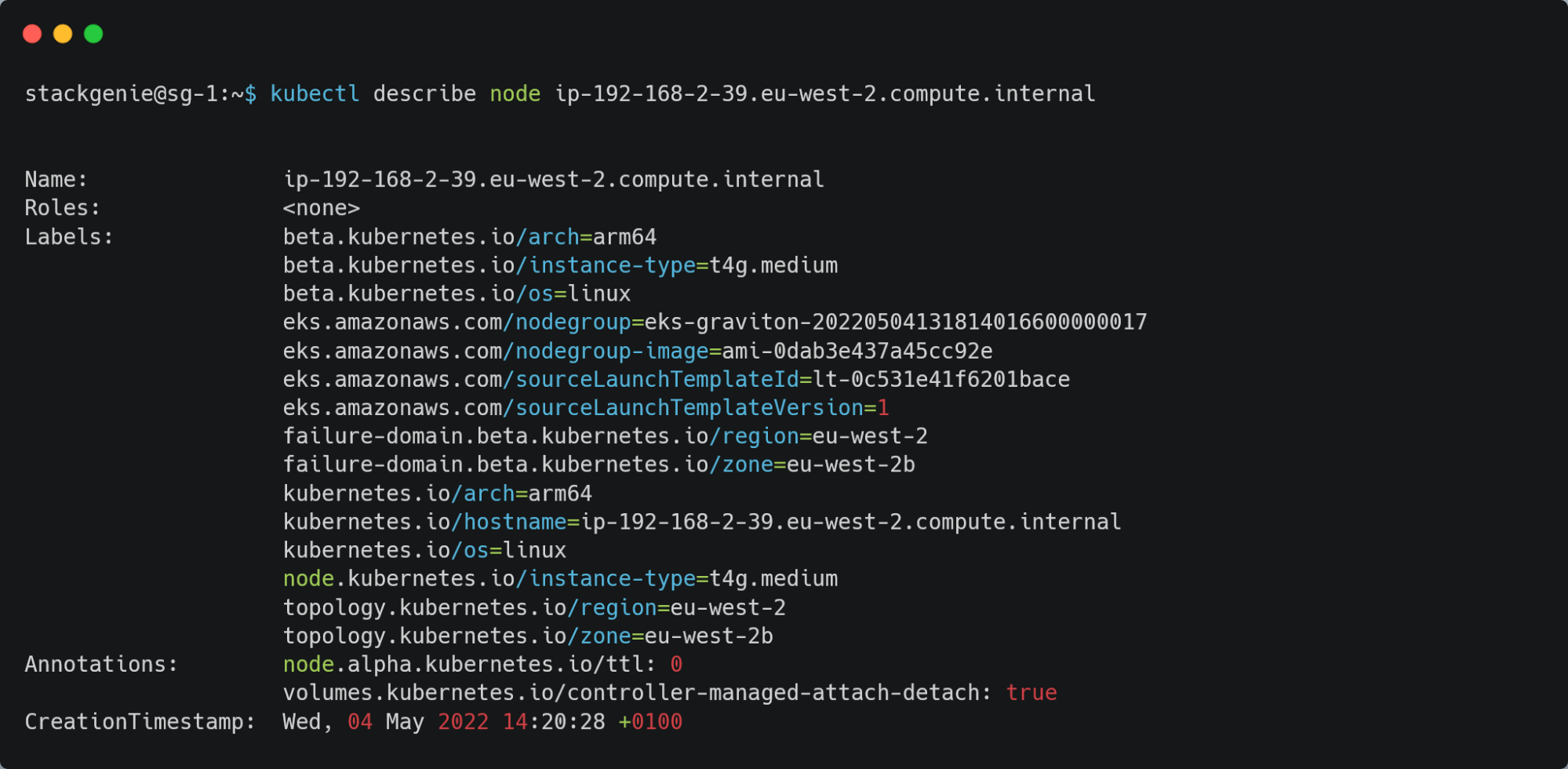
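The source does not say which tool was used to create the cluster. As one possible approach, the two node groups could be described in an eksctl config file. This is a minimal sketch: the cluster name, region, instance types, and node counts are assumptions chosen for illustration, and only the node group names and the Kubernetes version come from the text above.

```shell
# Write a hypothetical eksctl ClusterConfig with one arm64 and one x86 node group.
# Cluster name, region, instance types, and sizes are assumptions, not from the article.
cat > multi-arch-cluster.yaml <<'EOF'
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: multi-arch-demo   # hypothetical cluster name
  region: us-east-1       # hypothetical region
  version: "1.21"         # version from the requirements table
managedNodeGroups:
  - name: eks-graviton
    instanceType: m6g.large   # Graviton2 (arm64), assumed instance type
    desiredCapacity: 2
  - name: eks-default
    instanceType: m5a.large   # AMD EPYC (x86), assumed instance type
    desiredCapacity: 2
    minSize: 1
    maxSize: 4
EOF

# To create the cluster (requires eksctl and AWS credentials):
#   eksctl create cluster -f multi-arch-cluster.yaml
```

Keeping both node groups in one file makes it easy to see that the only difference between them is the instance family.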

- Once the nodes are up, review the current setup.

- The nodes within the default node group should show the following label:

- kubernetes.io/arch: amd64

- The nodes within the Graviton node group should show the following label:

- kubernetes.io/arch: arm64
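One way to check the architecture labels from the command line is to print them as extra columns. This sketch assumes EKS managed node groups, which set the `eks.amazonaws.com/nodegroup` label; the function name `show_node_arch` is made up for this example.

```shell
# List every node with its CPU architecture and EKS node group as extra columns.
# Assumes managed node groups, which carry the eks.amazonaws.com/nodegroup label.
show_node_arch() {
  kubectl get nodes \
    --label-columns kubernetes.io/arch \
    --label-columns eks.amazonaws.com/nodegroup
}
```

Calling `show_node_arch` should list amd64 for the eks-default nodes and arm64 for the eks-graviton nodes.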

Deploying ingress and a sample web application.

High Level Architecture

Applying Nginx Ingress Manifest Into Cluster.

$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.2.0/deploy/static/provider/aws/deploy.yaml

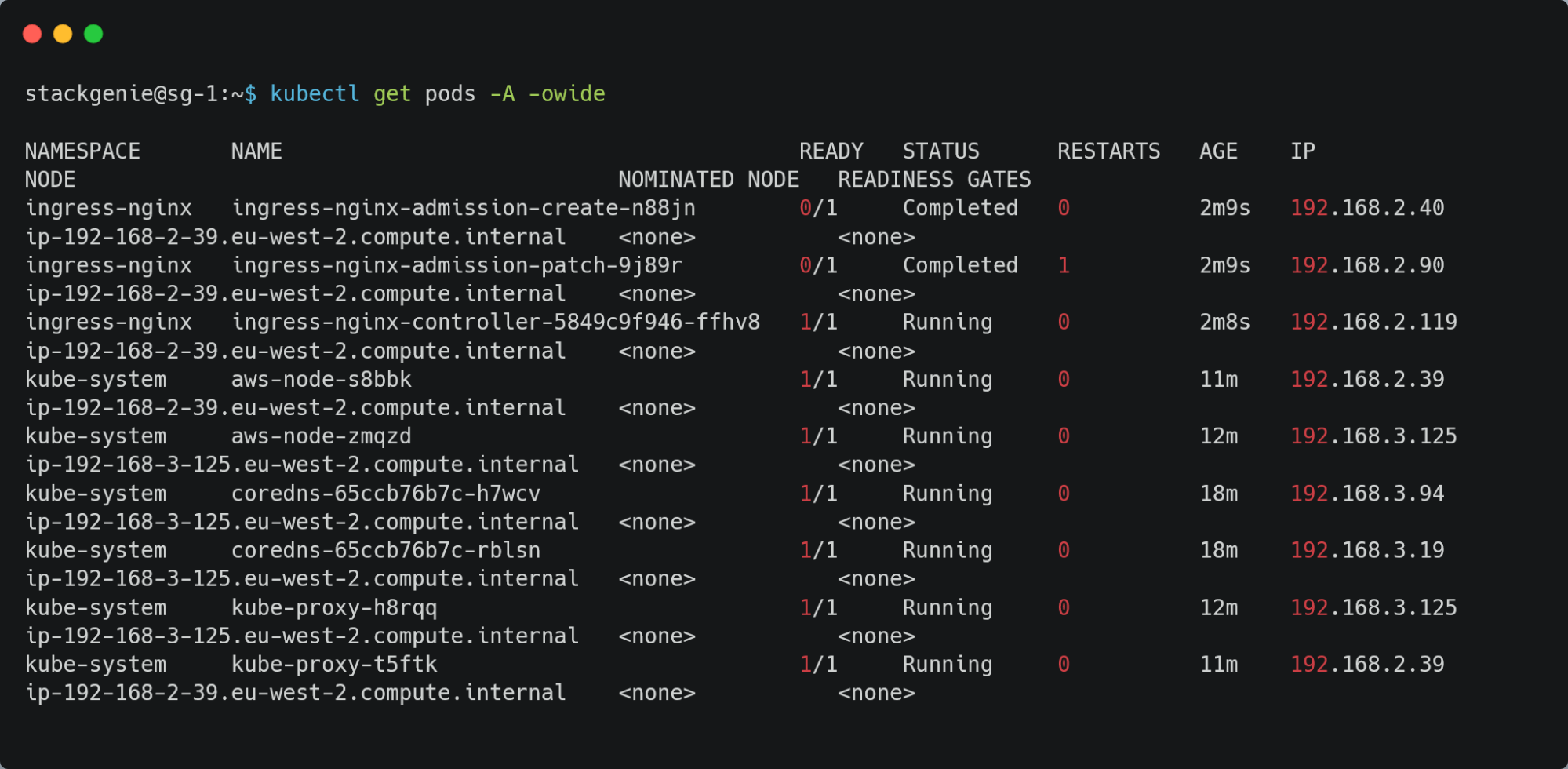

- Review where the pods have scheduled.

- The ingress controller is scheduled in a Graviton node.

Note: As the nginx ingress deployment shows, pods can easily be scheduled on the Graviton instances, and you can also schedule them on the x86 instances.
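To see where the ingress pods landed without reading a screenshot, the pod-to-node mapping can be printed directly. This is a small sketch; `pods_with_nodes` is a hypothetical helper name, and `ingress-nginx` is the namespace created by the manifest applied above.

```shell
# Show each pod in a namespace together with the node it was scheduled on.
# Usage: pods_with_nodes <namespace>
pods_with_nodes() {
  kubectl get pods -n "$1" \
    -o custom-columns=POD:.metadata.name,NODE:.spec.nodeName,STATUS:.status.phase
}
```

For example, `pods_with_nodes ingress-nginx` lists the controller pod and its node name, which can then be matched against the node architecture labels.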

Applications That Can Only Be Scheduled On X86 Architecture

$ kubectl apply -f https://raw.githubusercontent.com/microservices-demo/microservices-demo/master/deploy/kubernetes/complete-demo.yaml

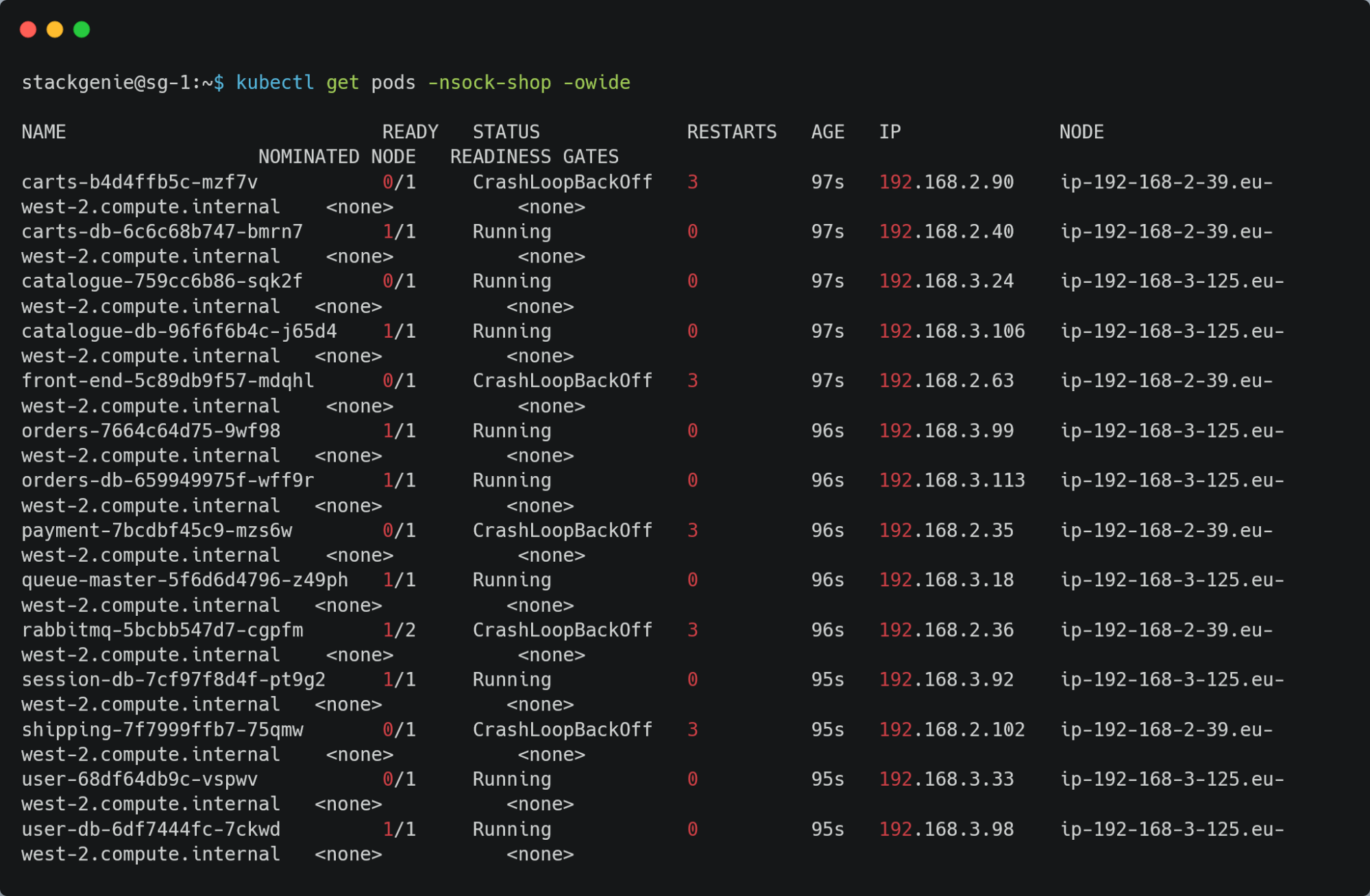

- There are times when an application can only be scheduled on x86 architecture and not on Graviton. Using the Sock Shop demo as an example of a modern application architecture, you can see the error logs that occur when its pods land on the Graviton instances.

- The error below is what you will see when a pod is scheduled on a Graviton instance and its amd64-only container fails to start.

$ kubectl logs -f carts-b4d4ffb5c-mzf7v -n sock-shop

standard_init_linux.go:228: exec user process caused: exec format error

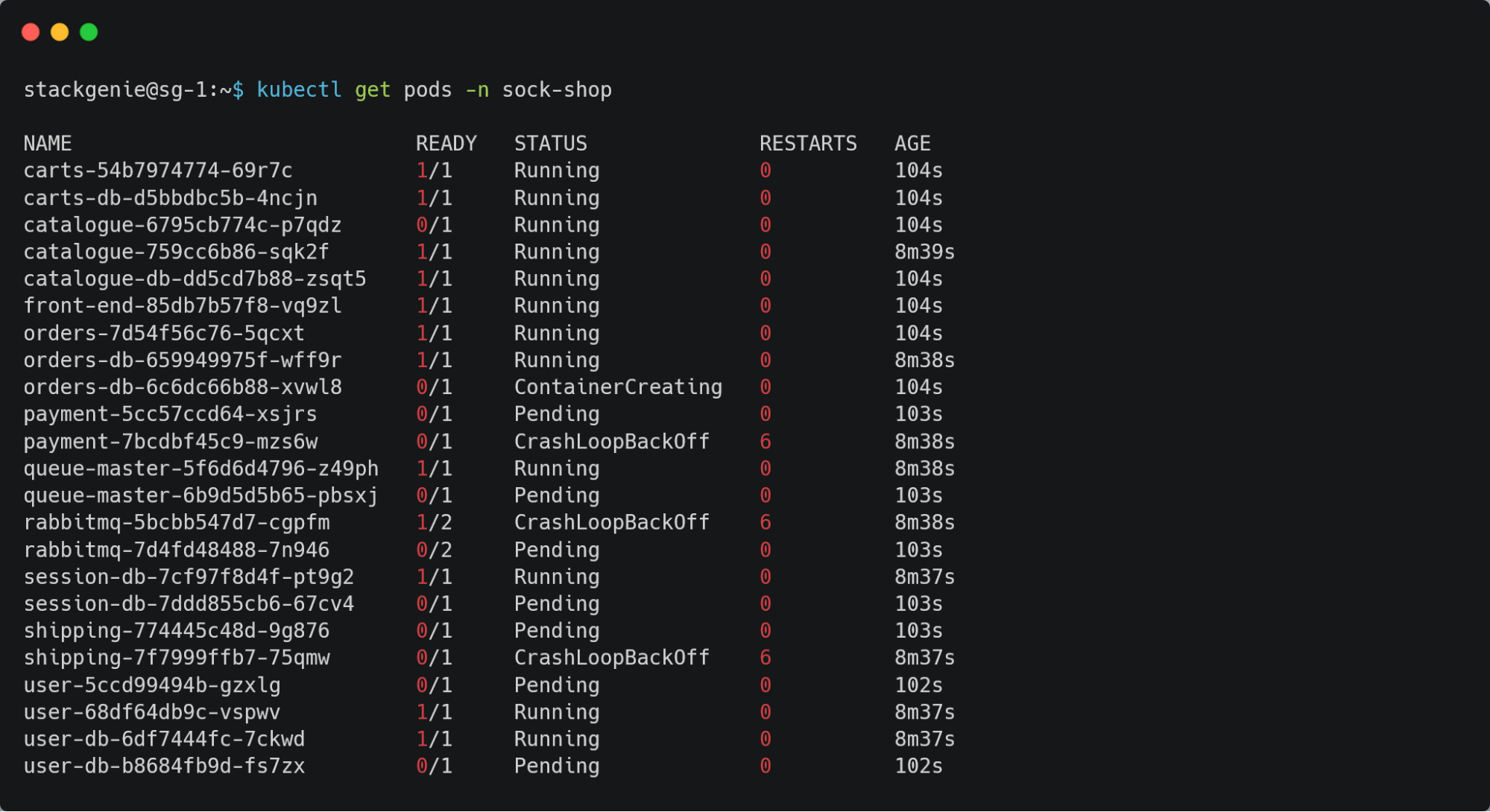
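An `exec format error` usually means the container binary was built for a different CPU architecture than the node it runs on. A quick way to confirm this for a failing pod is to look up its node and read that node's architecture label. The helper below is a sketch with a made-up name, `pod_node_arch`.

```shell
# Print the kubernetes.io/arch label of the node a pod was scheduled on.
# Usage: pod_node_arch <namespace> <pod-name>
pod_node_arch() {
  local ns="$1" pod="$2" node
  # Look up the node the pod is bound to (empty while the pod is still Pending).
  node="$(kubectl get pod "$pod" -n "$ns" -o jsonpath='{.spec.nodeName}')" || return 1
  if [ -z "$node" ]; then
    echo "pod $pod has not been scheduled yet" >&2
    return 1
  fi
  # Dots inside the label key are escaped so jsonpath treats it as one key.
  kubectl get node "$node" -o jsonpath='{.metadata.labels.kubernetes\.io/arch}'
}
```

For the carts pod from the log above, `pod_node_arch sock-shop carts-b4d4ffb5c-mzf7v` would be expected to report the architecture of the node it crashed on.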

- As the application is running on a multi-architecture EKS cluster, you can simply schedule it on the x86 nodes by using a node selector that sets the architecture to amd64.

    nodeSelector:
      beta.kubernetes.io/os: linux
      kubernetes.io/arch: amd64
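Instead of editing each manifest by hand, the same selector could be patched onto every Deployment in the namespace. This is a sketch under the assumption that all Sock Shop workloads are Deployments in the `sock-shop` namespace; the function name `pin_deployments_to_amd64` is hypothetical.

```shell
# Add kubernetes.io/arch=amd64 to the pod template of every Deployment in a namespace.
# A JSON merge patch merges into the existing nodeSelector map, so other keys are kept.
# Usage: pin_deployments_to_amd64 <namespace>
pin_deployments_to_amd64() {
  local ns="$1" deploy
  local patch='{"spec":{"template":{"spec":{"nodeSelector":{"kubernetes.io/arch":"amd64"}}}}}'
  for deploy in $(kubectl get deployments -n "$ns" -o name); do
    kubectl patch "$deploy" -n "$ns" --type merge -p "$patch"
  done
}
```

Running `pin_deployments_to_amd64 sock-shop` triggers a rollout for each patched Deployment, so the replacement pods are only eligible for amd64 nodes.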

- Now that these changes have been made, you can assess the state of the pods.

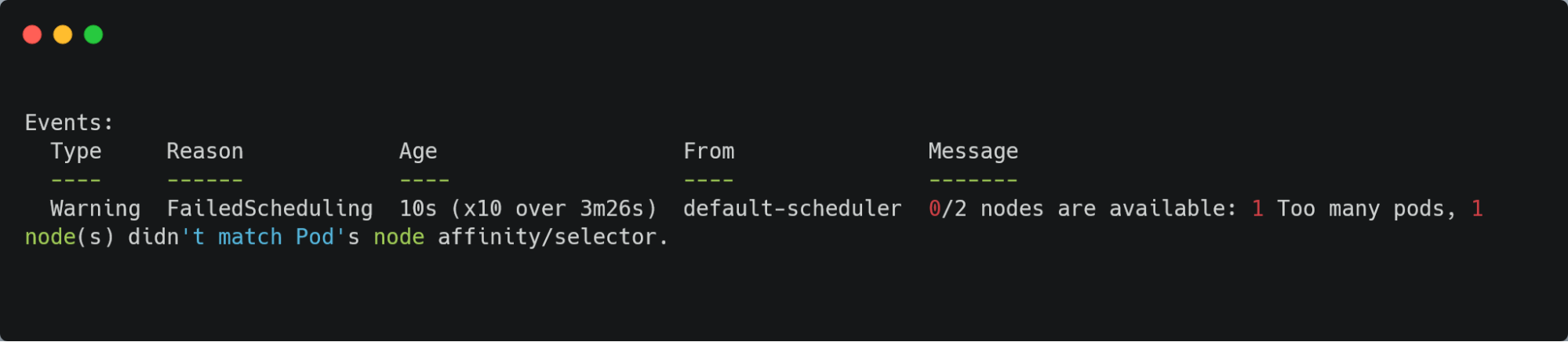

- Some pods are listed as ‘Pending’, for the following reason:

Note: The Sock Shop application supports only the amd64 architecture, so its pods cannot be scheduled on the Graviton node group, which uses arm64 processors. They stay Pending until the default node group has enough capacity.

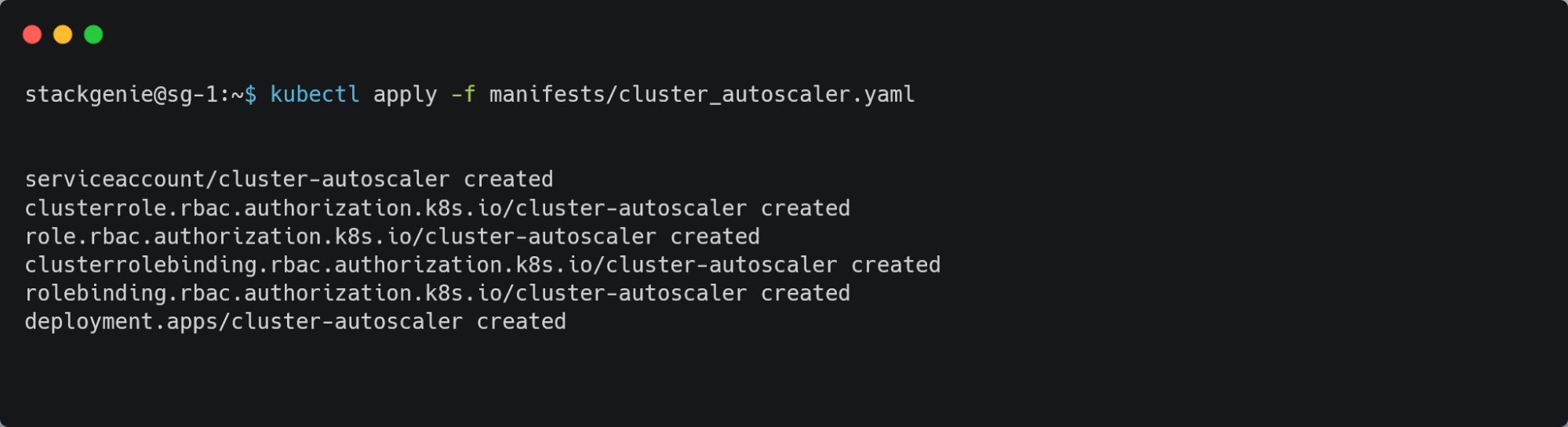
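The reason a pod is stuck can also be read from the scheduler's events rather than inferred. The sketch below lists Pending pods and the matching `FailedScheduling` events; `explain_pending` is a made-up helper name.

```shell
# List Pending pods in a namespace, then the scheduler events that explain them.
# Usage: explain_pending <namespace>
explain_pending() {
  local ns="$1"
  kubectl get pods -n "$ns" --field-selector=status.phase=Pending
  kubectl get events -n "$ns" --field-selector=reason=FailedScheduling
}
```

With the node selector in place, the events from `explain_pending sock-shop` would typically mention that some nodes did not match the pod's node selector or lacked resources.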

Resolution: Add A Cluster Autoscaler

- With too many pods and not enough nodes, you need to add a Cluster Autoscaler to the mix to bring up the necessary capacity in the right node group. See also: Cluster Autoscaler considerations.

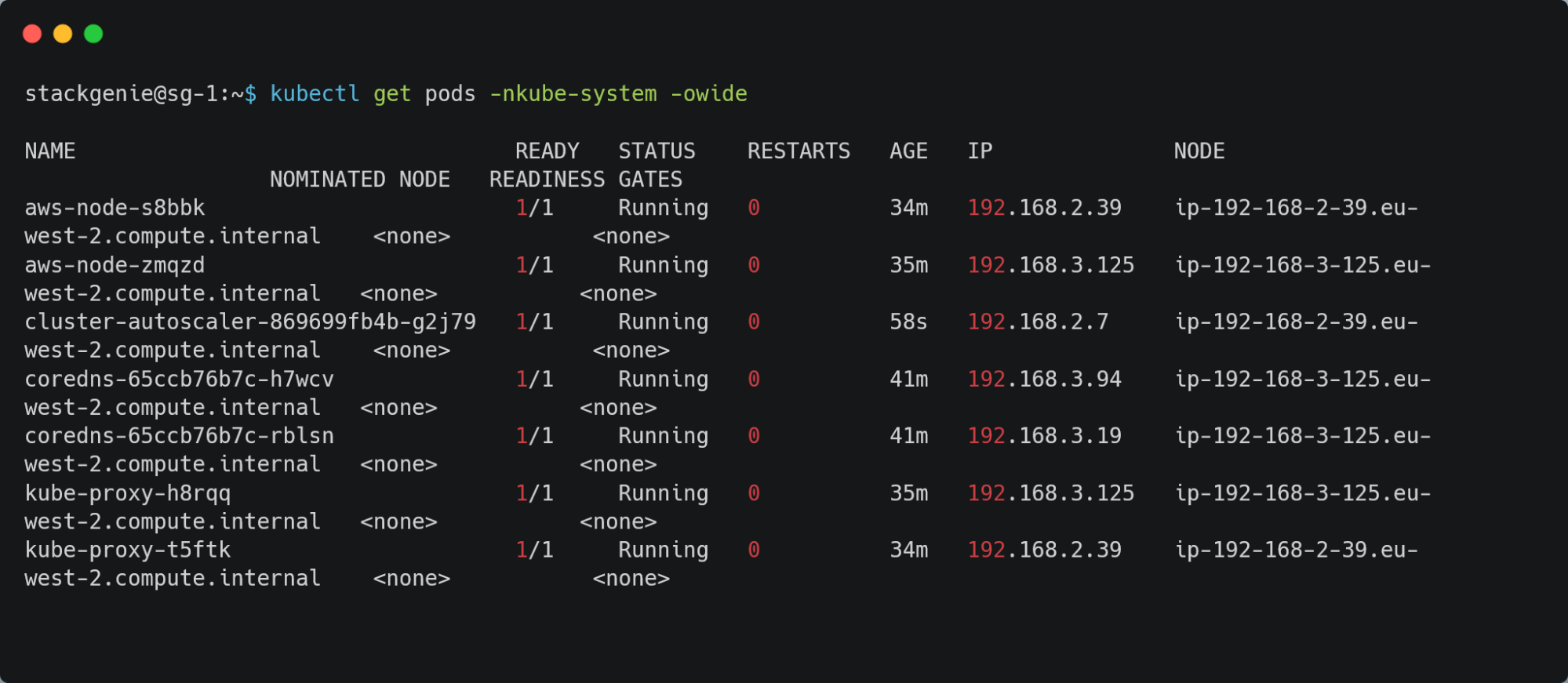

- Listing pods under kube-system namespace

- The Cluster Autoscaler is scheduled on the Graviton node and is running.

- A review of the logs above shows that the nodes in the default node group are being scaled to accommodate the unscheduled pods.

- A review of the architecture type in the new node shows…

- And finally, assessing the pods illustrates that…
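The checks in this list can be repeated from the command line. This sketch assumes the autoscaler runs as a Deployment named `cluster-autoscaler` in `kube-system`, which is a common convention but is not confirmed by the source; `check_autoscaler` is a hypothetical helper name.

```shell
# Review recent Cluster Autoscaler activity and the resulting nodes and pods.
# Assumes a Deployment named cluster-autoscaler in kube-system (not confirmed by the article).
# Usage: check_autoscaler <app-namespace>
check_autoscaler() {
  local ns="$1"
  # Recent autoscaler log lines, where scale-up decisions are recorded.
  kubectl -n kube-system logs deployment/cluster-autoscaler --tail=50
  # Nodes with their architecture, to confirm new capacity is amd64.
  kubectl get nodes --label-columns kubernetes.io/arch
  # Application pods and the nodes they ended up on.
  kubectl get pods -n "$ns" -o wide
}
```

After a scale-up, `check_autoscaler sock-shop` should show additional amd64 nodes and the previously Pending pods placed on them.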

Note: For EKS cluster autoscaler installation, please refer.

Conclusion

As you can see, it’s quite easy to add a Graviton node group to the cluster and use the popular tooling. As long as the containers are in this list, they should work on both Graviton and x86 architectures.

