As more businesses move their applications to Kubernetes, the need for simple and reliable storage solutions is growing. Managing data storage in Kubernetes, especially for apps that require permanent data like databases or file uploads, can get complicated quickly.

This is where Rook-Ceph comes in: a powerful tool that takes the headache out of managing storage. It combines the strength of Ceph, a trusted storage system, with the simplicity of Rook, which makes everything easy to handle within Kubernetes.

This post explores Rook-Ceph's architecture and benefits, how it simplifies storage management in Kubernetes environments, and how to deploy it using Helm charts.

What is Rook-Ceph?

Rook is an open-source project that brings file, block, and object storage systems into Kubernetes clusters. It acts as an operator to simplify the deployment and management of storage services within Kubernetes.

Ceph, on the other hand, is a highly scalable and robust storage solution that provides unified storage capabilities.

Rook-Ceph combines the strengths of both, enabling Kubernetes users to leverage Ceph’s powerful storage features with the ease of Kubernetes-native management.

Why Rook-Ceph?

Managing storage in Kubernetes can be complex and time-consuming, especially when scaling and ensuring data reliability. Rook-Ceph simplifies this by automating the deployment and management of storage, making it easier for teams to focus on their applications without worrying about storage infrastructure.

Here’s why Rook-Ceph is a valuable solution for Kubernetes environments.

- Seamless Integration with Kubernetes

Rook-Ceph is designed to work natively within Kubernetes, making it easy to deploy, manage, and scale storage resources. It abstracts the complexities of Ceph, allowing Kubernetes users to focus on their applications rather than the underlying storage infrastructure.

- Reliable Storage Solution

Ceph provides a unified storage platform that supports block, file, and object storage. With Rook-Ceph, users can manage all these storage types through a single interface, simplifying storage management and reducing operational overhead.

- Scalability and Flexibility

Rook-Ceph is built to scale with your Kubernetes cluster. Whether you are running a small development environment or a large-scale production system, Rook-Ceph can grow with your needs. Its flexible architecture allows you to add or remove storage nodes dynamically, ensuring that your storage infrastructure can adapt to changing demands.

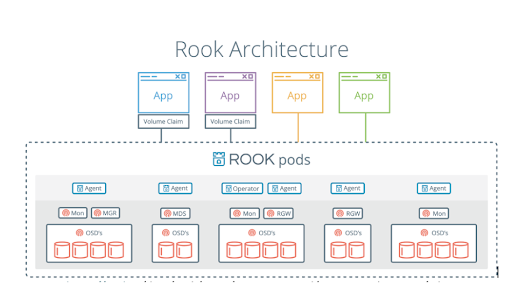

Architecture of Rook-Ceph

Source: https://rook.io/docs/rook/v1.10/Getting-Started/storage-architecture/#design

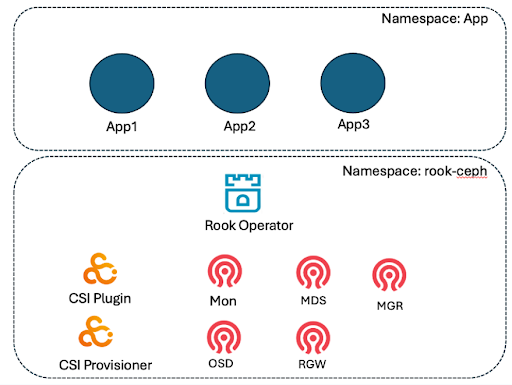

Rook-Ceph leverages Kubernetes operators to manage the lifecycle of Ceph clusters. The key components of Rook-Ceph architecture include:

- Rook Operator

The Rook operator is a Kubernetes controller that automates the deployment, configuration, and management of Ceph clusters. It monitors the state of the cluster and performs necessary actions to ensure the cluster remains healthy and operational.

- Ceph Cluster

Ceph is a distributed storage system that provides excellent performance, reliability, and scalability. The Ceph cluster consists of several components, including monitors (MONs), object storage daemons (OSDs), and metadata servers (MDS). These components work together to provide a distributed and resilient storage system. Below is an explanation of each key component’s function and job in Ceph.

Rook-Ceph Components: Functions and Roles

1. CSI Plugin

Function: The CSI (Container Storage Interface) plugin in Ceph provides a standardized way for Kubernetes to interface with the storage system. It enables Kubernetes to manage the lifecycle of storage volumes within the Ceph cluster.

Job:

- Volume Provisioning: Create and delete volumes on demand.

- Volume Attachment: Attach and detach volumes to/from containers.

- Snapshot Management: Create and manage snapshots of volumes.

- Volume Expansion: Resize volumes dynamically as needed.

2. CSI Provisioner

Function: The CSI Provisioner is a component of the CSI plugin that handles the dynamic provisioning of storage volumes.

Job:

- Volume Creation: Automatically create new volumes when PersistentVolumeClaims (PVCs) are made in Kubernetes.

- Volume Deletion: Delete volumes when they are no longer needed.

- Storage Class Management: Use storage classes to define different types of storage and their properties (e.g., replication, performance).

3. Mon (Monitor)

Function: Ceph Monitors are critical for maintaining the health and consistency of the cluster. They keep track of the cluster state, including the placement group maps, and provide the authority for cluster changes.

Job:

- Cluster Map Management: Maintain and distribute the cluster map, including OSD maps, monitor maps, and CRUSH maps.

- Cluster Health Monitoring: Continuously monitor the health of the cluster and detect failures.

- Authentication: Handle authentication between Ceph components.

4. MDS (Metadata Server)

Function: The Ceph Metadata Server (MDS) manages metadata for the Ceph File System (CephFS). It enables high-performance file operations by managing the namespace (directories and file metadata).

Job:

- Namespace Management: Manage the file system namespace and provide metadata services to clients.

- Load Balancing: Distribute metadata across multiple MDS instances for scalability.

- Cache Management: Cache metadata to improve performance for file operations.

5. OSD (Object Storage Daemon)

Function: Ceph OSDs are responsible for storing data and handling data replication, recovery, and rebalancing.

Job:

- Data Storage: Store and retrieve data objects.

- Data Replication: Ensure data is replicated across multiple OSDs to provide redundancy.

- Data Recovery and Rebalancing: Handle the recovery of data in case of OSD failure and rebalance data across the cluster to optimize space and performance.

- Heartbeat Monitoring: Exchange heartbeats with peer OSDs and report to the monitors so that failed OSDs are detected.

6. RGW (RADOS Gateway)

Function: The Ceph RADOS Gateway (RGW) provides object storage interfaces compatible with Amazon S3 and OpenStack Swift.

Job:

- HTTP API Support: Provide a RESTful API for object storage operations, compatible with S3 and Swift APIs.

- Multi-Tenancy: Support multiple users and tenants with isolation and access control.

- Data Management: Manage object data, including storage, retrieval, and deletion.

- Integration: Integrate with other Ceph components for backend storage and replication.

7. MGR (Manager Daemon)

Function: The Ceph Manager (MGR) daemon provides additional monitoring and management services for the Ceph cluster.

Job:

- Cluster Metrics and Dashboard: Collect and expose various metrics about the cluster’s performance and health through a dashboard.

- Module Management: Host various management modules, such as those for orchestration, monitoring, and visualization.

- Coordination Services: Assist with coordination tasks that require a global view of the cluster, such as balancing and maintenance operations.

- API Services: Provide an API for external tools to interact with the Ceph cluster for management and monitoring purposes.

Rook-Ceph and Custom Resource Definitions (CRDs)

Rook-Ceph utilizes Kubernetes Custom Resource Definitions (CRDs) to define and manage Ceph resources within Kubernetes clusters. CRDs extend the Kubernetes API to allow for the creation of custom resources, which Rook uses to represent various aspects of a Ceph storage cluster.

6 Key CRDs in Rook-Ceph

1. CephCluster

This CRD defines the overall configuration and desired state of the Ceph cluster. It includes details such as the number of monitors, OSDs (Object Storage Daemons), and the configuration settings for the Ceph components. The Rook operator continuously monitors the CephCluster resource and ensures that the actual state of the Ceph cluster aligns with the desired state specified by the user.

2. CephBlockPool

This CRD manages the block storage pools in the Ceph cluster. Block pools are collections of storage resources that can be used to create block storage volumes for Kubernetes pods.

3. CephFilesystem

This CRD represents the configuration of a CephFS file system, which provides a POSIX-compliant file system interface. Users can define the metadata servers (MDS) and data pools associated with the CephFS.

4. CephObjectStore

This CRD defines an object storage gateway using the Ceph RGW (RADOS Gateway). It allows users to configure the object storage service, including settings for the data pools, the number of gateways, and the placement of the gateways.

5. CephObjectStoreUser

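This CRD manages user accounts for the Ceph object storage service. It specifies the object store the user belongs to, a display name, and optional capabilities and quota limits; Rook generates the user's access credentials and stores them in a Kubernetes Secret.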

6. CephNFS

This CRD configures NFS (Network File System) servers backed by CephFS, allowing users to expose CephFS as NFS shares.
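To make the CRDs above more concrete, here is a minimal sketch of what a CephCluster and a CephBlockPool resource might look like. The file name `rook-ceph-sketch.yaml`, the pool name `replicapool`, the Ceph image tag, and the monitor and replica counts are assumptions chosen for illustration, not values from this post; check them against the Rook release you run.

```shell
# Write an illustrative CephCluster and CephBlockPool manifest to a local file.
# All names and sizes below are assumptions for this sketch.
cat > rook-ceph-sketch.yaml <<'EOF'
apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: quay.io/ceph/ceph:v17.2.6   # assumed tag; use one your Rook release supports
  dataDirHostPath: /var/lib/rook       # where Ceph keeps its config on each host
  mon:
    count: 3                           # desired number of monitors
    allowMultiplePerNode: false
  storage:
    useAllNodes: true                  # let Rook consider every node
    useAllDevices: true                # and every unused raw device on it
---
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool                    # hypothetical pool name
  namespace: rook-ceph
spec:
  failureDomain: host                  # spread replicas across hosts
  replicated:
    size: 3                            # keep three copies of each object
EOF

# With the operator running, the file could then be applied with:
#   kubectl apply -f rook-ceph-sketch.yaml
```

The operator would pick up both resources and work towards the state they describe. When deploying through the Helm charts covered later, equivalent settings go into the cluster chart's values instead of hand-written manifests.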

How CRDs Work in Rook-Ceph

- Define the Desired State

Users define the desired state of their Ceph resources by creating custom resources, which are instances of the CRDs. These are YAML manifests that specify the configuration and properties of the Ceph resources.

- Rook Operator

The Rook operator is a Kubernetes operator that continuously watches for changes to the CRDs. It takes the desired state specified in the CRDs and ensures that the actual state of the Ceph cluster matches this desired state. If there are any discrepancies, the operator takes corrective actions to reconcile the differences.

- Reconciliation Loop

The Rook operator runs a reconciliation loop, where it periodically checks the current state of the Ceph resources and compares it with the desired state defined in the CRDs. If changes are needed, the operator updates the Ceph cluster accordingly.
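The pattern behind a reconciliation loop can be shown with a toy script. This is not Rook's implementation, only a sketch of the idea: `desired_mons` stands in for a value such as the monitor count in a CephCluster resource, and `actual_mons` stands in for what is currently running. Both variable names and the starting values are made up for the example.

```shell
#!/bin/sh
# Toy reconciliation loop: illustrates the pattern only, not Rook's code.
desired_mons=3   # hypothetical desired state (from the custom resource)
actual_mons=1    # hypothetical observed state (from the cluster)

reconcile() {
  if [ "$actual_mons" -lt "$desired_mons" ]; then
    actual_mons=$((actual_mons + 1))   # pretend to start one missing monitor
    echo "scaled up: $actual_mons/$desired_mons monitors"
  elif [ "$actual_mons" -gt "$desired_mons" ]; then
    actual_mons=$((actual_mons - 1))   # pretend to remove one surplus monitor
    echo "scaled down: $actual_mons/$desired_mons monitors"
  else
    echo "in sync: $actual_mons/$desired_mons monitors"
  fi
}

# Each pass compares observed with desired state and takes one corrective step.
while [ "$actual_mons" -ne "$desired_mons" ]; do
  reconcile
done
reconcile   # a further pass finds nothing left to do
```

The point of the design is that the loop never needs to know how the drift happened. It only compares the two states and nudges the actual one towards the desired one, which is why editing a custom resource is enough to change the cluster.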

Deploying Rook-Ceph Using Helm Chart

Prerequisites:

1. Minimum resources: 6-8 vCPUs and 12-16 GB of memory.

2. At least one additional raw disk that has no partitions or filesystem and is not used by other workloads or applications.

Deployment Helm charts

The deployment consists of two helm chart deployments:

- Rook-Ceph Operator Helm Chart

- Rook-Ceph Cluster Helm Chart

A. Rook-Ceph Operator Helm Chart

The Rook-Ceph Operator Helm chart is designed to install the Rook operator and its associated resources. This chart sets up the necessary components to manage and orchestrate Ceph clusters within a Kubernetes environment.

Key Components of the Rook-Ceph Operator Helm Chart:

- Rook Operator: The core component that manages the lifecycle of Ceph clusters by monitoring and acting on changes to Ceph CRDs.

- Custom Resource Definitions (CRDs): Defines the schema for the custom resources used by Rook to manage Ceph (e.g., CephCluster, CephBlockPool).

- RBAC (Role-Based Access Control): Configures the necessary roles and permissions to allow the Rook operator to function correctly within the Kubernetes cluster.

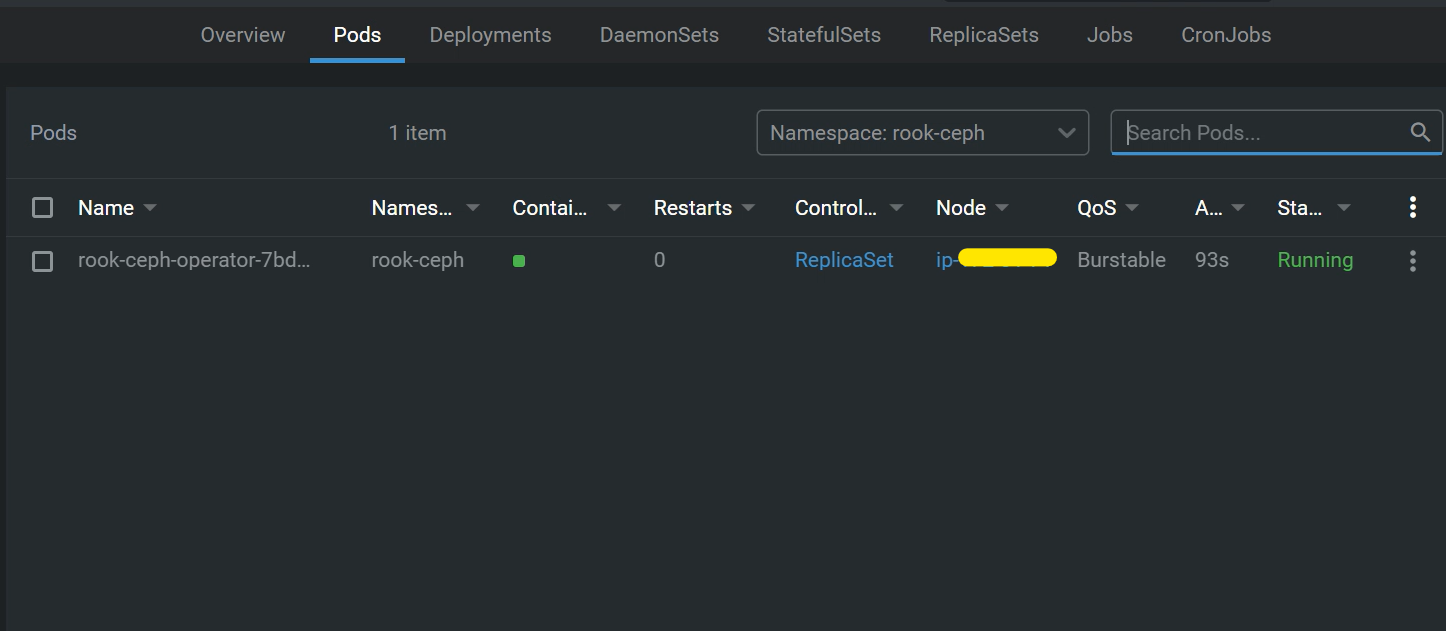

How to Install Rook-Ceph Operator Using Helm

To install the Rook-Ceph operator using Helm, you typically follow these steps:

- Add the Rook Helm repository:

helm repo add rook-release https://charts.rook.io/release

helm repo update

- Install the Rook-Ceph operator:

helm install rook-ceph rook-release/rook-ceph --create-namespace --namespace rook-ceph

- Verify the installation:

kubectl get pods -n rook-ceph

The Rook-Ceph operator pod should now be running in the rook-ceph namespace.

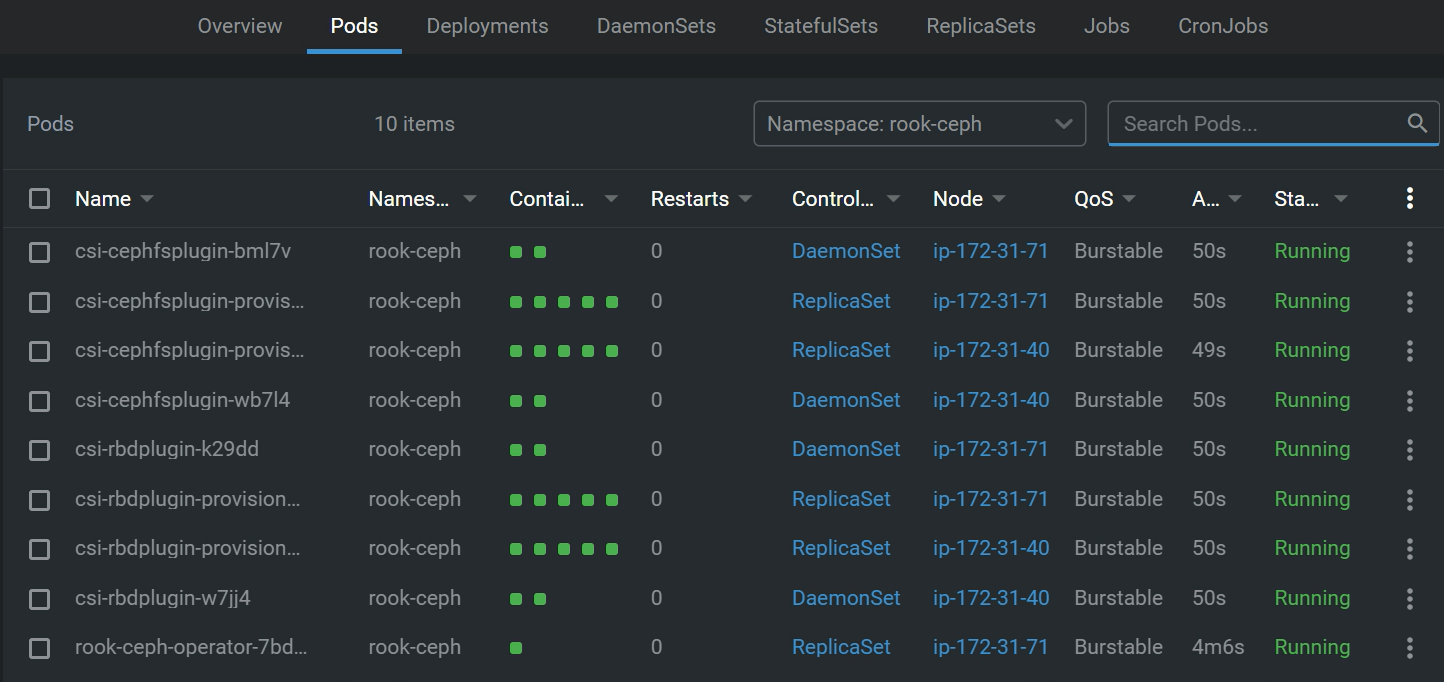
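If the chart defaults need adjusting, the operator install can also be driven from a values file. The sketch below assumes a file named `operator-values.yaml` and a handful of settings from the operator chart; the chosen values are illustrative, and the chart's own values file for your Rook version remains the reference.

```shell
# Write an illustrative values file for the rook-ceph operator chart.
# The file name and the chosen settings are assumptions for this sketch.
cat > operator-values.yaml <<'EOF'
crds:
  enabled: true            # let the chart install the Rook CRDs
logLevel: INFO             # operator log verbosity
enableDiscoveryDaemon: false
csi:
  enableRbdDriver: true    # block storage (RBD) CSI driver
  enableCephfsDriver: true # shared filesystem (CephFS) CSI driver
EOF

# The file could then be passed to Helm, for example:
#   helm upgrade --install rook-ceph rook-release/rook-ceph \
#     --namespace rook-ceph --create-namespace -f operator-values.yaml
```

Keeping overrides in a file rather than in `--set` flags makes the install repeatable and easy to review in version control.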

B. Rook-Ceph Cluster Helm Chart

The Rook-Ceph Cluster Helm chart is used to deploy and manage a Ceph storage cluster on top of the Rook operator. This chart configures the Ceph cluster according to the specifications provided by the user.

Key Components of the Rook-Ceph Cluster Helm Chart:

- CephCluster CRD: Defines the configuration and desired state of the Ceph cluster, including the number of monitors, OSDs, and other Ceph-specific settings.

- CephBlockPool, CephFilesystem, and CephObjectStore CRDs: Used to define and manage specific storage resources within the Ceph cluster.

- Storage Configuration: Specifies the storage devices and configurations to be used by the Ceph cluster.

How to deploy a Ceph storage cluster using the Rook-Ceph Cluster Helm chart

- Add the Rook Helm repository:

helm repo add rook-release https://charts.rook.io/release

helm repo update

- Install the Rook-Ceph cluster chart:

helm install rook-ceph-cluster rook-release/rook-ceph-cluster --set toolbox.enabled=true --namespace rook-ceph
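The cluster chart can also take its settings from a values file instead of `--set` flags. The sketch below assumes a file named `cluster-values.yaml`; the monitor count and the use of all nodes and devices are illustrative choices, not recommendations from this post.

```shell
# Write an illustrative values file for the rook-ceph-cluster chart.
# The file name and the settings below are assumptions for this sketch.
cat > cluster-values.yaml <<'EOF'
operatorNamespace: rook-ceph   # namespace where the operator chart was installed
toolbox:
  enabled: true                # deploy the toolbox pod with the ceph CLI
cephClusterSpec:
  mon:
    count: 3                   # desired number of monitors
  storage:
    useAllNodes: true
    useAllDevices: true        # consume every unused raw device Rook finds
EOF

# The file could then be passed to Helm, for example:
#   helm install rook-ceph-cluster rook-release/rook-ceph-cluster \
#     --namespace rook-ceph -f cluster-values.yaml
```

Once the pods have started, and assuming the toolbox was enabled, cluster health can usually be checked with `kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph status`.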

Rook Ceph vs. AWS, Azure, and GCP Storage Solutions

| Feature/Aspect | Rook Ceph | AWS | Azure | GCP |
|---|---|---|---|---|
| Unified Storage Solution | Block, file, and object storage | EBS: Block, EFS: File, S3: Object | Disks: Block, Files: File, Blob: Object | Persistent Disks: Block, Filestore: File, Cloud Storage: Object |
| Flexibility and Customization | High flexibility and customization | Fixed features, limited customization | Predefined tiers, limited customization | Predefined configurations, limited customization |
| Cost Control | Potential cost savings with commodity hardware | Predictable but can be expensive for high usage | Predictable but can escalate with usage | Competitive but can be costly for extensive use |
| Vendor Lock-in | Open-source, vendor-neutral | Tied to AWS ecosystem | Tied to Azure ecosystem | Tied to GCP ecosystem |
| Storage Density and Scalability | Scales horizontally, suited for large-scale deployments | Scales per service model, may need manual scaling | Scalable within limits, some automatic scaling | Scalable, some automatic scaling but may need manual management |
| Data Management Features | Advanced features like replication, erasure coding, automated recovery | Built-in features like snapshots, backups, limited control | Built-in features like snapshots, backups, limited control | Built-in features like snapshots, backups, limited control |
| Integration with Kubernetes | Native integration, dynamic provisioning | EBS, EFS, S3: Integration possible, additional configuration needed | Disks, Files, Blob: Integration possible, additional configuration needed | Persistent Disks, Filestore, Cloud Storage: Integration possible, additional configuration needed |

The comparison table above highlights the key differences and advantages of using Rook Ceph versus AWS, Azure, and GCP managed storage services.

Rook Ceph:

- Unified Storage Solution: Supports block, file, and object storage.

- Flexibility and Customization: Ideal for large-scale, persistent workloads and detailed control over storage configurations.

- Cost Savings: Utilizes commodity hardware, offering potential savings.

- Vendor Neutral: Open-source solution that avoids vendor lock-in.

AWS, Azure, and GCP:

- Managed Services: Offer fixed features and predictable pricing.

- Ease of Use: Designed for straightforward implementation.

- Limited Customization: Fewer options for tailoring to specific needs.

- Higher Costs: Potentially more expensive for extensive use.

- Vendor Lock-in: Tied to specific cloud ecosystems.

In short, Rook Ceph suits large-scale, persistent workloads that need detailed control over storage configuration, while the managed services trade that control for ease of use and predictable pricing. The cloud providers' storage also integrates with Kubernetes, but it often requires additional configuration and may not match the native support and comprehensive features provided by Rook Ceph.

Elevate Your Kubernetes Experience with Rook-Ceph

Rook-Ceph is a game-changer for Kubernetes users looking to simplify their storage management. By integrating the powerful Ceph storage system with Kubernetes, Rook-Ceph provides a seamless, scalable, and efficient storage solution.

Whether you are running a small development environment or a large-scale production system, Rook-Ceph can meet your storage needs with ease.

For those who prefer managed solutions, platforms like StackGenie offer Kubernetes services that can help organizations streamline deployment and management. With expertise in handling Kubernetes environments, it ensures your infrastructure is optimized, leaving you to focus on your core applications.

Take advantage of Rook-Ceph’s capabilities and elevate your Kubernetes storage management to the next level!

FAQ

1. Can we deploy Rook-Ceph manually without using a Helm Chart?

Yes, Rook-Ceph can be deployed manually without using a Helm Chart.

Here’s a simplified step-by-step guide:

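Step 1: Clone the Rook repository and change to the example manifests (in recent Rook releases, including the v1.10 line referenced above, they live under deploy/examples):

git clone https://github.com/rook/rook.git

cd rook/deploy/examples

Step 2: Create the CRDs, then apply the common resources and the Rook operator:

kubectl create -f crds.yaml

kubectl apply -f common.yaml

kubectl apply -f operator.yaml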

Step 3: Configure and deploy the Ceph cluster:

- Create or modify the cluster.yaml file to define your Ceph cluster’s configuration.

- Then apply the configuration:

kubectl apply -f cluster.yaml

Step 4: Create storage classes:

Create a storageclass.yaml file and apply it to make storage available for your applications.

kubectl apply -f storageclass.yaml

Step 5: Use the storage classes in your Kubernetes deployments:

Define a PersistentVolumeClaim (PVC) and use it in your applications to request storage.
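Steps 4 and 5 can be sketched as follows for block storage. The StorageClass name `rook-ceph-block`, the pool name `replicapool`, the claim name `demo-pvc`, and the 1Gi size are assumptions for illustration. The secret names follow the defaults Rook normally creates in the rook-ceph namespace, so verify them against your cluster before relying on this.

```shell
# Step 4 sketch: a StorageClass backed by a Ceph block pool (RBD).
# Names marked "assumed" are illustrative, not taken from this post.
cat > storageclass.yaml <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rook-ceph-block            # assumed StorageClass name
provisioner: rook-ceph.rbd.csi.ceph.com
parameters:
  clusterID: rook-ceph             # namespace of the Rook cluster
  pool: replicapool                # assumed CephBlockPool name
  imageFormat: "2"
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/provisioner-secret-namespace: rook-ceph
  csi.storage.k8s.io/controller-expand-secret-name: rook-csi-rbd-provisioner
  csi.storage.k8s.io/controller-expand-secret-namespace: rook-ceph
  csi.storage.k8s.io/node-stage-secret-name: rook-csi-rbd-node
  csi.storage.k8s.io/node-stage-secret-namespace: rook-ceph
  csi.storage.k8s.io/fstype: ext4
reclaimPolicy: Delete
allowVolumeExpansion: true
EOF

# Step 5 sketch: a claim that requests a volume from that StorageClass.
cat > pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc                   # assumed claim name
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi                 # assumed size
  storageClassName: rook-ceph-block
EOF

# Both files could then be applied with:
#   kubectl apply -f storageclass.yaml -f pvc.yaml
```

A pod then mounts the volume by referencing `demo-pvc` under `volumes[].persistentVolumeClaim.claimName` in its spec. The CSI provisioner creates the underlying Ceph image when the claim is bound.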

2. What happens if I don’t use Rook-Ceph for storage in Kubernetes?

Without Rook-Ceph, you would need to manually set up a complex storage system like Ceph. While Ceph is a powerful and scalable solution, it can be difficult to install, configure, and maintain. You’d have to manage multiple storage components and ensure they work together seamlessly. Additionally, you would need to manually connect this storage system to your Kubernetes cluster, which can add further complexity to your infrastructure management.

3. Can Rook-Ceph be used for all types of storage in Kubernetes?

Rook-Ceph supports block, file, and object storage in Kubernetes. This versatility makes it a comprehensive solution for managing different types of storage needs within Kubernetes clusters, whether it’s persistent volumes for applications or object storage for scalable data access.